New Artificial Intelligence Technique Enables Machines That See the World More Like Humans Do

A new “common-sense” approach to computer vision enables artificial intelligence that interprets scenes more accurately than other systems do.

Computer vision systems sometimes make inferences about a scene that fly in the face of common sense. For example, if a robot were processing a scene of a dinner table, it might completely ignore a bowl that is visible to any human observer, estimate that a plate is floating above the table, or misperceive a fork to be penetrating a bowl rather than leaning against it.

Move that computer vision system to a self-driving car and the stakes become much higher: such systems have, for example, failed to detect emergency vehicles and pedestrians crossing the street.

To overcome these errors, MIT researchers have developed a framework that helps machines see the world more like humans do. Their new artificial intelligence system for analyzing scenes learns to perceive real-world objects from just a few images, and perceives scenes in terms of these learned objects.

The researchers built the framework using probabilistic programming, an AI approach that enables the system to cross-check detected objects against input data, to see if the images recorded from a camera are a likely match to any candidate scene. Probabilistic inference allows the system to infer whether mismatches are likely due to noise or to errors in the scene interpretation that need to be corrected by further processing.
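As a rough illustration of this cross-checking idea, the sketch below scores two candidate scene interpretations against a handful of observed depth readings. It is not the researchers' code: the function names, the Gaussian-plus-outlier noise model, and all numeric values are assumptions chosen only to show how small mismatches can be explained as sensor noise while large mismatches point to a wrong interpretation.

```python
import math

def log_likelihood(observed, rendered, sigma=0.01, outlier_prob=0.05, max_depth=5.0):
    """Log-probability of observed depths given one candidate scene.

    Assumed noise model (illustrative only): each pixel is either the
    rendered depth plus Gaussian sensor noise, or, with small probability,
    an outlier drawn uniformly from [0, max_depth].
    """
    total = 0.0
    for obs, ren in zip(observed, rendered):
        gauss = math.exp(-0.5 * ((obs - ren) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))
        uniform = 1.0 / max_depth
        total += math.log((1 - outlier_prob) * gauss + outlier_prob * uniform)
    return total

def best_candidate(observed, candidates):
    """Score every candidate scene and return the most likely one with all scores."""
    scores = {name: log_likelihood(observed, depths) for name, depths in candidates.items()}
    return max(scores, key=scores.get), scores

# Hypothetical depth readings (meters) along one image row.
observed = [1.00, 1.01, 0.80, 0.81, 1.00]
candidates = {
    "table_only": [1.00, 1.00, 1.00, 1.00, 1.00],       # ignores the bowl
    "table_with_bowl": [1.00, 1.00, 0.80, 0.80, 1.00],  # bowl rises 20 cm toward the camera
}
best, scores = best_candidate(observed, candidates)
print(best)
```

The one-centimeter deviations are cheap under the noise model, so the interpretation that includes the bowl scores well; the interpretation that ignores the bowl must treat two pixels as outliers and is penalized accordingly.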

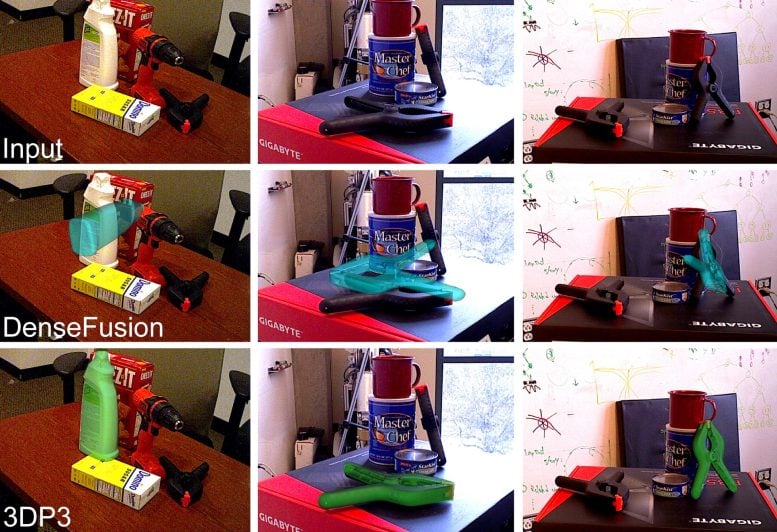

This image shows how 3DP3 (bottom row) infers more accurate pose estimates of objects from input images (top row) than deep learning systems (middle row). Credit: Courtesy of the researchers

This common-sense safeguard allows the system to detect and correct many errors that plague the “deep-learning” approaches that have also been used for computer vision. Probabilistic programming also makes it possible to infer probable contact relationships between objects in the scene, and use common-sense reasoning about these contacts to infer more accurate positions for objects.

“If you don’t know about the contact relationships, then you could say that an object is floating above the table; that would be a valid explanation. As humans, it is obvious to us that this is physically unrealistic and the object resting on top of the table is a more likely pose of the object. Because our reasoning system is aware of this sort of knowledge, it can infer more accurate poses. That is a key insight of this work,” says lead author Nishad Gothoskar, an electrical engineering and computer science (EECS) PhD student with the Probabilistic Computing Project.

In addition to improving the safety of self-driving cars, this work could enhance the performance of computer perception systems that must interpret complicated arrangements of objects, like a robot tasked with cleaning a cluttered kitchen.

Gothoskar’s co-authors include recent EECS PhD graduate Marco Cusumano-Towner; research engineer Ben Zinberg; visiting student Matin Ghavamizadeh; Falk Pollok, a software engineer in the MIT-IBM Watson AI Lab; recent EECS master’s graduate Austin Garrett; Dan Gutfreund, a principal investigator in the MIT-IBM Watson AI Lab; Joshua B. Tenenbaum, the Paul E. Newton Career Development Professor of Cognitive Science and Computation in the Department of Brain and Cognitive Sciences (BCS) and a member of the Computer Science and Artificial Intelligence Laboratory; and senior author Vikash K. Mansinghka, principal research scientist and leader of the Probabilistic Computing Project in BCS. The research is being presented at the Conference on Neural Information Processing Systems in December.

A blast from the past

To develop the system, called “3D Scene Perception via Probabilistic Programming (3DP3),” the researchers drew on a concept from the early days of AI research, which is that computer vision can be thought of as the “inverse” of computer graphics.

Computer graphics focuses on generating images based on the representation of a scene; computer vision can be seen as the inverse of this process. Gothoskar and his collaborators made this technique more learnable and scalable by incorporating it into a framework built using probabilistic programming.

“Probabilistic programming allows us to write down our knowledge about some aspects of the world in a way a computer can interpret, but at the same time, it allows us to express what we don’t know, the uncertainty. So, the system is able to automatically learn from data and also automatically detect when the rules don’t hold,” Cusumano-Towner explains.

In this case, the model is encoded with prior knowledge about 3D scenes. For instance, 3DP3 “knows” that scenes are composed of different objects, and that these objects often lie flat on top of each other, though they may not always be in such simple relationships. This enables the model to reason about a scene with more common sense.

Learning shapes and scenes

To analyze an image of a scene, 3DP3 first learns about the objects in that scene. After being shown only five images of an object, each taken from a different angle, 3DP3 learns the object’s shape and estimates the volume it would occupy in space.

“If I show you an object from five different perspectives, you can build a pretty good representation of that object. You’d understand its color, its shape, and you’d be able to recognize that object in many different scenes,” Gothoskar says.

Mansinghka adds, “This is way less data than deep-learning approaches. For example, the Dense Fusion neural object detection system requires thousands of training examples for each object type. In contrast, 3DP3 only requires a few images per object, and reports uncertainty about the parts of each object’s shape that it doesn’t know.”

The 3DP3 system generates a graph to represent the scene, where each object is a node and the lines that connect the nodes indicate which objects are in contact with one another. This enables 3DP3 to produce a more accurate estimation of how the objects are arranged. (Deep-learning approaches rely on depth images to estimate object poses, but these methods don’t produce a graph structure of contact relationships, so their estimations are less accurate.)
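A toy version of such a scene graph can be sketched in a few lines. Everything here is a simplifying assumption made for illustration: the `SceneNode` class, the `add_contact` and `base_heights` helpers, and the object dimensions are hypothetical, and the sketch tracks only vertical stacking, whereas a real system would have to reason about full 3D poses.

```python
from dataclasses import dataclass, field

@dataclass
class SceneNode:
    """One object in the scene; edges point to the objects resting on it."""
    name: str
    height: float  # vertical extent of the object, in meters (made-up values)
    children: list = field(default_factory=list)

def add_contact(parent, child):
    """Record that `child` rests on top of `parent` and return the child."""
    parent.children.append(child)
    return child

def base_heights(root, base=0.0, out=None):
    """Walk the graph and place each object on the top surface of its support."""
    if out is None:
        out = {}
    out[root.name] = base
    for child in root.children:
        base_heights(child, base + root.height, out)
    return out

table = SceneNode("table", 0.75)
bowl = add_contact(table, SceneNode("bowl", 0.08))
plate = add_contact(table, SceneNode("plate", 0.02))
fork = add_contact(plate, SceneNode("fork", 0.01))

heights = base_heights(table)
print(heights)
```

Because each object's position is derived from the object it touches, a plate cannot end up hovering above the table in this representation: its base height is fixed by the contact edge rather than estimated independently.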

Outperforming baseline models

The researchers compared 3DP3 with several deep-learning systems, all tasked with estimating the poses of 3D objects in a scene.

In nearly all instances, 3DP3 generated more accurate poses than other models and performed far better when some objects were partially obstructing others. And 3DP3 only needed to see five images of each object, while each of the baseline models it outperformed needed thousands of images for training.

When used in conjunction with another model, 3DP3 was able to improve that model’s accuracy. For instance, a deep-learning model might predict that a bowl is floating slightly above a table, but because 3DP3 has knowledge of the contact relationships and can see that this is an unlikely configuration, it is able to make a correction by aligning the bowl with the table.
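The bowl correction can be pictured as a small Bayesian calculation that weighs a prior preference for contact against the pose estimate coming from another model. The sketch below is an assumption-laden illustration, not the 3DP3 implementation: the `refine_gap` function, the 90 percent contact prior, the 2 cm estimate noise, and the grid of candidate gaps are all invented for the example.

```python
import math

def gaussian_logpdf(x, mean, sigma):
    """Log-density of a normal distribution."""
    return -0.5 * ((x - mean) / sigma) ** 2 - math.log(sigma * math.sqrt(2 * math.pi))

def refine_gap(estimated_gap, sigma=0.02, contact_prior=0.9, max_gap=0.5, steps=50):
    """Return the most probable bowl-to-table gap (meters) given a noisy estimate.

    Hypotheses (assumed for illustration): the bowl touches the table (gap 0)
    with prior mass `contact_prior`, or it sits at one of `steps` evenly
    spaced gaps up to `max_gap`, which share the remaining prior mass.
    """
    hypotheses = [(0.0, math.log(contact_prior))]
    for i in range(1, steps + 1):
        hypotheses.append((max_gap * i / steps, math.log((1 - contact_prior) / steps)))
    # Posterior score = log prior + log likelihood of the upstream estimate.
    scored = [(prior + gaussian_logpdf(estimated_gap, gap, sigma), gap)
              for gap, prior in hypotheses]
    return max(scored)[1]

slightly_floating = refine_gap(0.03)  # upstream model says the bowl hovers 3 cm up
clearly_raised = refine_gap(0.30)     # upstream model says the bowl is 30 cm up
print(slightly_floating, clearly_raised)
```

With these assumed numbers, a 3 cm gap is within plausible estimation error, so the contact hypothesis wins and the bowl is aligned with the table. A 30 cm gap is too large to blame on noise, so the prior is overridden, which mirrors the idea that the system can detect when its usual rules do not hold.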

“I found it surprising to see how large the errors from deep learning could sometimes be, producing scene representations where objects really didn’t match with what people would perceive. I also found it surprising that only a little bit of model-based inference in our causal probabilistic program was enough to detect and fix these errors. Of course, there is still a long way to go to make it fast and robust enough for challenging real-time vision systems, but for the first time, we’re seeing probabilistic programming and structured causal models improving robustness over deep learning on hard 3D vision benchmarks,” Mansinghka says.

In the future, the researchers would like to push the system further so it can learn about an object from a single image, or a single frame in a movie, and then be able to detect that object robustly in different scenes. They would also like to explore the use of 3DP3 to gather training data for a neural network. It is often difficult for humans to manually label images with 3D geometry, so 3DP3 could be used to generate more complex image labels.

The 3DP3 system “combines low-fidelity graphics modeling with common-sense reasoning to correct large scene interpretation errors made by deep learning neural nets. This type of approach could have broad applicability as it addresses important failure modes of deep learning. The MIT researchers’ accomplishment also shows how probabilistic programming technology previously developed under DARPA’s Probabilistic Programming for Advancing Machine Learning (PPAML) program can be applied to solve central problems of common-sense AI under DARPA’s current Machine Common Sense (MCS) program,” says Matt Turek, DARPA Program Manager for the Machine Common Sense Program, who was not involved in this research, though the program partially funded the study.

Reference: “3DP3: 3D Scene Perception via Probabilistic Programming” by Nishad Gothoskar, Marco Cusumano-Towner, Ben Zinberg, Matin Ghavamizadeh, Falk Pollok, Austin Garrett, Joshua B. Tenenbaum, Dan Gutfreund and Vikash K. Mansinghka, 30 October 2021, Computer Science > Computer Vision and Pattern Recognition.

arXiv:2111.00312

Additional funders include the Singapore Defense Science and Technology Agency collaboration with the MIT Schwarzman College of Computing, Intel’s Probabilistic Computing Center, the MIT-IBM Watson AI Lab, the Aphorism Foundation, and the Siegel Family Foundation.