High-accuracy model recognition method of mobile device based on weighted feature similarity


This section describes the principles and steps of Recognizer in detail.

Symbol description

\(f\): feature. There are three kinds of feature: the extracted feature \(f_{{\text{e}}}\), the brand feature \(f_{{\text{b}}}\) and the model feature \(f_{{\text{m}}}\). Here, \(f_{{\text{e}}}\) is a feature extracted from the public attributes of the device, \(f_{{\text{b}}}\) is a brand feature selected from the extracted features using the RFBR algorithm, and \(f_{{\text{m}}}\) is a model feature selected from the extracted features using the RFMR algorithm. The general representations of the ith extracted feature, brand feature, and model feature are \(f_{{\text{e}}}^{{\left( { * ,i} \right)}}\), \(f_{{\text{b}}}^{{\left( { * ,i} \right)}}\), and \(f_{{\text{m}}}^{{\left( { * ,i} \right)}}\). For a device \(D_{i}\), the kth extracted feature, kth brand feature and kth model feature are denoted as \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\), \(f_{{\text{b}}}^{{\left( {D_{i} ,k} \right)}}\) and \(f_{{\text{m}}}^{{\left( {D_{i} ,k} \right)}}\) respectively.

\({\mathbf{F}}\): feature vector. There are three kinds of feature vector: the extracted feature vector \({\mathbf{F}}_{{\text{e}}}\), the brand feature vector \({\mathbf{F}}_{{\text{b}}}\) and the model feature vector \({\mathbf{F}}_{{\text{m}}}\). \({\mathbf{F}}_{{\text{e}}}\) is a vector composed of extracted features, \({\mathbf{F}}_{{\text{e}}} = \left[ {f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} , \ldots } \right]\). \({\mathbf{F}}_{{\text{b}}}\) is a vector composed of brand features, \({\mathbf{F}}_{{\text{b}}} = \left[ {f_{{\text{b}}}^{{\left( { * ,1} \right)}} ,f_{{\text{b}}}^{{\left( { * ,2} \right)}} , \ldots } \right]\). \({\mathbf{F}}_{{\text{m}}}\) is a vector composed of model features, \({\mathbf{F}}_{{\text{m}}} = \left[ {f_{{\text{m}}}^{{\left( { * ,1} \right)}} ,f_{{\text{m}}}^{{\left( { * ,2} \right)}} , \ldots } \right]\). For a device \(D_{i}\), the extracted feature vector, brand feature vector and model feature vector are denoted as \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}}\) (\({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} = \left[ {f_{{\text{e}}}^{{\left( {D_{i} ,1} \right)}} ,f_{{\text{e}}}^{{\left( {D_{i} ,2} \right)}} , \ldots } \right]\)), \({\mathbf{F}}_{{\text{b}}}^{{\left( {D_{i} } \right)}}\) (\({\mathbf{F}}_{{\text{b}}}^{{\left( {D_{i} } \right)}} = \left[ {f_{{\text{b}}}^{{\left( {D_{i} ,1} \right)}} ,f_{{\text{b}}}^{{\left( {D_{i} ,2} \right)}} , \ldots } \right]\)) and \({\mathbf{F}}_{{\text{m}}}^{{\left( {D_{i} } \right)}}\) (\({\mathbf{F}}_{{\text{m}}}^{{\left( {D_{i} } \right)}} = \left[ {f_{{\text{m}}}^{{\left( {D_{i} ,1} \right)}} ,f_{{\text{m}}}^{{\left( {D_{i} ,2} \right)}} , \ldots } \right]\)) respectively.

\(S\left( {f^{{\left( {D_{i} ,k} \right)}} ,f^{{\left( {D_{j} ,k} \right)}} } \right)\): similarity function between two device features, \(0 \le S\left( {f^{{\left( {D_{i} ,k} \right)}} ,f^{{\left( {D_{j} ,k} \right)}} } \right) \le 1\). The similarity functions of the kth extracted feature, brand feature and model feature of devices \(D_{i}\) and \(D_{j}\) are denoted as \(S\left( {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} ,f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} } \right)\), \(S\left( {f_{{\text{b}}}^{{\left( {D_{i} ,k} \right)}} ,f_{{\text{b}}}^{{\left( {D_{j} ,k} \right)}} } \right)\) and \(S\left( {f_{{\text{m}}}^{{\left( {D_{i} ,k} \right)}} ,f_{{\text{m}}}^{{\left( {D_{j} ,k} \right)}} } \right)\).

\({\mathbf{S}}\left( {{\mathbf{F}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}^{{\left( {D_{j} } \right)}} } \right)\): similarity function vector. \({\mathbf{S}}\left( {{\mathbf{F}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}^{{\left( {D_{j} } \right)}} } \right) = \left[ {S\left( {f^{{\left( {D_{i} ,1} \right)}} ,f^{{\left( {D_{j} ,1} \right)}} } \right),S\left( {f^{{\left( {D_{i} ,2} \right)}} ,f^{{\left( {D_{j} ,2} \right)}} } \right), \ldots } \right]\). The similarity function vectors of the extracted feature vectors, brand feature vectors and model feature vectors of devices \(D_{i}\) and \(D_{j}\) are denoted as \({\mathbf{S}}\left( {{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)}} } \right)\), \({\mathbf{S}}\left( {{\mathbf{F}}_{{\text{b}}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}_{{\text{b}}}^{{\left( {D_{j} } \right)}} } \right)\) and \({\mathbf{S}}\left( {{\mathbf{F}}_{{\text{m}}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}_{{\text{m}}}^{{\left( {D_{j} } \right)}} } \right)\).

\({\mathbf{F}}\backslash f^{{\left( { * ,i} \right)}}\): result of removing \(f^{{\left( { * ,i} \right)}}\) from the feature vector \({\mathbf{F}}\). For the extracted feature vector \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}}\) of device \(D_{i}\), if \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} = \left[ {f_{{\text{e}}}^{{\left( {D_{i} ,1} \right)}} ,f_{{\text{e}}}^{{\left( {D_{i} ,2} \right)}} ,f_{{\text{e}}}^{{\left( {D_{i} ,3} \right)}} } \right]\), then \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{i} ,2} \right)}} = \left[ {f_{{\text{e}}}^{{\left( {D_{i} ,1} \right)}} ,f_{{\text{e}}}^{{\left( {D_{i} ,3} \right)}} } \right]\).

\(B_{{\text{a}}}\): collection of all devices whose brand is a, \(B_{{\text{a}}} = \left\{ {D_{1} ,D_{2} , \ldots } \right\}\). \(\left| {B_{{\text{a}}} } \right|\) is the number of elements in \(B_{{\text{a}}}\).

\({\mathbf{B}}\): collection of all device brands, \({\mathbf{B}} = \left\{ {B_{{\text{a}}} ,B_{{\text{b}}} , \ldots } \right\}\). \(M\) is the size of \({\mathbf{B}}\), \(M = \left| {\mathbf{B}} \right|\).

\({\mathbf{B}} - \left\{ {B_{i} } \right\}\): result of removing \(B_{i}\) from \({\mathbf{B}}\). If \({\mathbf{B}} = \left\{ {B_{{\text{a}}} ,B_{{\text{b}}} ,B_{{\text{c}}} } \right\}\), then \({\mathbf{B}} - \left\{ {B_{{\text{b}}} } \right\} = \left\{ {B_{{\text{a}}} ,B_{{\text{c}}} } \right\}\).

\(\overrightarrow {(t)}\): a \(t\)-dimensional row vector in which every entry is \(1/t\). For example, \(\overrightarrow {(2)} = [0.5,0.5]\).

\(\min \left| {(a - b),\varepsilon } \right|\): minimum of \(\left| {a - b} \right|\), \(\left| {a - b + \varepsilon } \right|\) and \(\left| {a - b - \varepsilon } \right|\).
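As a small worked illustration of the last two symbols (the numbers are made up for the example): multiplying a three-component similarity function vector by \(\overrightarrow {(3)}^{{\mathbf{T}}}\) yields the arithmetic mean of its entries, and the tolerance-aware minimum absorbs a difference that is no larger than \(\varepsilon\).

$$\left[ {1,0.8,0} \right]\overrightarrow {(3)}^{{\mathbf{T}}} = \frac{1 + 0.8 + 0}{3} = 0.6,\qquad \min \left| {(1000 - 999),1} \right| = \min \left\{ {\left| 1 \right|,\left| 2 \right|,\left| 0 \right|} \right\} = 0$$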

Principles and steps of Recognizer

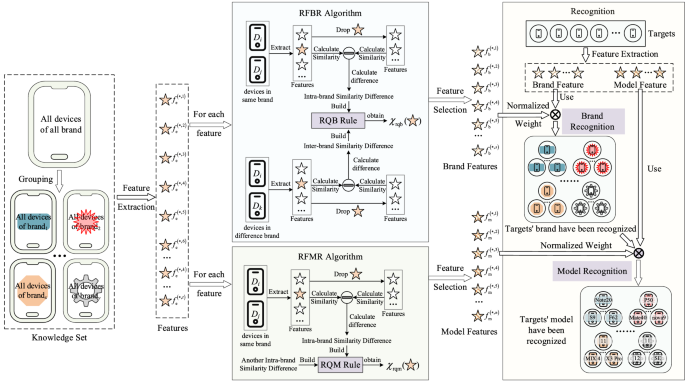

Recognizer first extracts the common attributes of all mobile devices as features, and formulates similarity calculation rules according to the form of the extracted features. Then, we propose the RFBR and RFMR strategies to assess the role of each feature in brand recognition and model recognition, respectively, for feature selection and weight determination. Finally, Recognizer uses the target’s features to identify its brand and model. The framework of Recognizer is shown in Fig. 1.

Fig. 1 The framework of Recognizer.

Recognizer consists of seven steps, as follows:

- Step 1 Group devices. All devices in the knowledge set are grouped by brand, so that all devices in a group share the same brand.

- Step 2 Extract features. In each group, we extract the attributes common to all devices as brand attributes. An attribute that is owned by all devices in all groups is taken as a feature.

- Step 3 Calculate similarity between two features. According to their form, we divide the extracted features into numerical features and string features. For each form, we build a feature similarity calculation strategy.

- Step 4 Select brand features. Based on the effect of each feature on the similarity between same-brand devices and on the similarity between devices of different brands, we propose the RFBR strategy to quantify the importance of each feature in brand recognition. The importance value is denoted as \({\chi }_{\mathrm{rqb}}\). Features whose \({\chi }_{\mathrm{rqb}}\) is greater than 0 are selected as brand features, and the value of \({\chi }_{\mathrm{rqb}}\) is used as the weight of the brand feature.

- Step 5 Select model features. Because each model corresponds to exactly one mobile device, there are no same-model device pairs whose similarity could be measured, so it is unreasonable to use the RFBR strategy for model feature selection. According to the effect of a feature on same-brand devices and the difference of this effect across all brands, we propose the RFMR strategy to quantify the importance of each feature in model recognition. The importance value is denoted as \({\chi }_{\mathrm{rqm}}\). Features whose \({\chi }_{\mathrm{rqm}}\) is greater than 0 are selected as model features, and \({\chi }_{\mathrm{rqm}}\) is used as the weight of the model feature.

- Step 6 Normalize weights. The weights of the brand features obtained in Step 4 and the weights of the model features obtained in Step 5 are normalized separately.

- Step 7 Recognize the target’s model. We obtain the brand features and model features of the target mobile device. After the brand of the target is recognized from the brand features and their weights, the model features and their weights are used to identify the model of the target.

Key steps of Recognizer

Among the steps of Recognizer, Steps 3, 4, 5 and 7 are the key steps. They are described in detail as follows.

(1) Calculate similarity between two features.

We divide the extracted features into numerical features and string features. If a feature value is a number obtained with a measurement tool, and therefore carries an unavoidable measurement error due to the limited precision of the tool, the feature is a numerical feature (e.g., length); otherwise, the feature is a string feature (e.g., operating system).

We measure the similarity between two numerical features by the difference between their values, whereas the similarity between two string features is determined by the inclusion relationship between the two strings. Although a number can be treated as a string, it is not reasonable to calculate the similarity between two numerical features from the inclusion relationship. For example, if two numerical features f1 (value 1000) and f2 (value 999) are regarded as string features, neither string contains the other, so the similarity between f1 and f2 is 0, which is clearly unreasonable for two nearly equal values. Therefore, we design a separate similarity strategy for each of the two feature types, as follows.

(a) Numerical feature similarity strategy

For numerical features, the smaller the difference between two feature values, the more similar the two features are. However, because of measurement error, the measured value deviates from the actual value. Taking the measurement error into account in the numerical feature similarity strategy is therefore more reasonable, as it reduces the effect of measurement error on the similarity between two numerical features. Accordingly, we define (1) and (2) as the numerical feature similarity calculation rules.

If the kth extracted features of base device Di and target device Dj are one-dimensional numerical features, the similarity between \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) is calculated by (1).

$$S\left( {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} ,f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} } \right) = \left\{ {\begin{array}{*{20}l} {1 - \frac{{\min \left| {\left( {\left| {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} } \right| - \left| {f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} } \right|} \right),\varepsilon } \right|}}{{f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} }},} \hfill & {\left| {f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} } \right| \le 2\left| {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} } \right|} \hfill \\ {0,} \hfill & {else} \hfill \\ \end{array} } \right.$$

(1)

In (1), \(\varepsilon\) is the measurement error threshold, and \(\left| {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} } \right|\) is the absolute value of numerical feature.

If \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) are multi-dimensional numerical features, a dimensional similarity is calculated for the values in each dimension, and the feature similarity is the product of all dimensional similarities. If \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} = (v_{k,1}^{{\left( {D_{i} } \right)}} , \ldots ,v_{k,s}^{{\left( {D_{i} } \right)}} )\) and \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} = (v_{k,1}^{{\left( {D_{j} } \right)}} , \ldots ,v_{k,s}^{{\left( {D_{j} } \right)}} )\), the similarity between the target feature \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and the base feature \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) is calculated using (2).

$$S\left( {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} ,f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} } \right) = \prod\limits_{t = 1}^{s} {S\left( {v_{k,t}^{{\left( {D_{i} } \right)}} ,v_{k,t}^{{\left( {D_{j} } \right)}} } \right)}$$

(2)

When calculating the feature similarity according to (1) and (2), if \(\left| {f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} } \right| > 2\left| {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} } \right|\) or \(\left| {v_{k,t}^{{\left( {D_{j} } \right)}} } \right| > 2\left| {v_{k,t}^{{\left( {D_{i} } \right)}} } \right|\), the difference between the two numerical feature values (or between the two values in a certain dimension) is too large. In this case, we consider the two numerical features dissimilar and set the feature similarity value to 0.
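The numerical rules can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation. The function names are hypothetical, the score is assumed to be one minus the relative difference so that it stays within the range \([0,1]\) required of \(S\), the division uses the absolute value of the base feature, and the handling of a zero base value is an added assumption because (1) does not cover it.

```python
import math


def min_abs_with_tolerance(a, b, eps):
    """min|(a - b), eps|: the smallest of |a-b|, |a-b+eps| and |a-b-eps|."""
    d = a - b
    return min(abs(d), abs(d + eps), abs(d - eps))


def numeric_similarity(base, target, eps):
    """Similarity of two one-dimensional numerical features, in the spirit of (1).

    Assumptions: the score is 1 minus the relative difference, and the
    denominator is the absolute value of the base feature.
    """
    base_abs, target_abs = abs(base), abs(target)
    if base_abs == 0:
        # Assumption: (1) is undefined for a zero base value.
        return 1.0 if target_abs == 0 else 0.0
    if target_abs > 2 * base_abs:
        # The values differ too much, so the features are treated as dissimilar.
        return 0.0
    return 1.0 - min_abs_with_tolerance(base_abs, target_abs, eps) / base_abs


def multi_numeric_similarity(base, target, eps):
    """Product of the per-dimension similarities, as in (2)."""
    return math.prod(numeric_similarity(b, t, eps) for b, t in zip(base, target))
```

With a tolerance of 1, the values 1000 and 999 from the earlier example are treated as identical, whereas with a tolerance of 0 their similarity is slightly below 1.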

(b) String feature similarity strategy

Since each string represents a specific meaning, each string is considered as a whole to calculate the similarity. In Recognizer, according to the number of strings in string feature, the string features are divided into single-string feature and multi-strings feature. We define (3) and (4) as string feature similarity rules.

If the kth extracted features \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) of devices Di and Dj are single-string features, we calculate the feature similarity between \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) according to (3).

$$S\left( {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} ,f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} } \right) = \left\{ {\begin{array}{*{20}l} {1,} \hfill & {f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} = f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} } \hfill \\ {0.8,} \hfill & { \, f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} \in f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} \, or \, f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} \in f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} } \hfill \\ {0,} \hfill & {else} \hfill \\ \end{array} } \right.$$

(3)

When \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} \in f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) or \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} \in f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\), that is, when one string is contained in the other, we consider the shorter feature value to be incomplete. In this case, the feature similarity value is set to 0.8 (an empirical value).
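Rule (3) can be sketched as a short Python function. The function name is hypothetical, the inclusion relation is read here as substring containment, and the guard that gives an empty string no partial credit is an added assumption rather than part of (3).

```python
def single_string_similarity(base, target, partial_score=0.8):
    """Similarity of two single-string features, following (3)."""
    if base == target:
        return 1.0
    if not base or not target:
        # Assumption: an empty value carries no information, so it gets no
        # partial credit even though "" is a substring of every string.
        return 0.0
    if base in target or target in base:
        # One value is contained in the other, i.e. it looks incomplete.
        return partial_score
    return 0.0
```

For instance, `single_string_similarity("Android", "Android 10")` falls into the containment case, while the strings `"1000"` and `"999"` score 0, which is why numerical features are handled separately.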

If \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) are multi-strings features, we construct a vector space from \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} \cup f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\), and vectorize \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) over it. The cosine similarity between the two vectors is taken as the similarity between the two multi-strings features. For example, if \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} = \left\{ {str1,str2} \right\}\) and \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} = \left\{ {str2,str3} \right\}\), the vector space is \(\left\{ {str1,str2,str3} \right\}\), the vectorization result of \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) is \([1,1,0]\), and the vectorization result of \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) is \([0,1,1]\). Denoting the vectorization results of \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) as \(V_{j,k}\) and \(V_{i,k}\) respectively, the feature similarity between \(f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}}\) and \(f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}}\) is calculated by (4).

$$S\left( {f_{{\text{e}}}^{{\left( {D_{i} ,k} \right)}} ,f_{{\text{e}}}^{{\left( {D_{j} ,k} \right)}} } \right) = \frac{{V_{i,k} \cdot V_{j,k} }}{{\left| {V_{i,k} } \right| \cdot \left| {V_{j,k} } \right|}}$$

(4)
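The vectorization and cosine similarity in (4) can be sketched as follows. The function name is hypothetical, and returning 0 when either feature is empty is an added assumption, since (4) is undefined for a zero vector.

```python
import math


def multi_string_similarity(base, target):
    """Cosine similarity of two multi-strings features, following (4)."""
    base_set, target_set = set(base), set(target)
    # The vector space is the union of the strings of both features.
    space = sorted(base_set | target_set)
    v_base = [1 if s in base_set else 0 for s in space]
    v_target = [1 if s in target_set else 0 for s in space]
    dot = sum(a * b for a, b in zip(v_base, v_target))
    norm = math.sqrt(sum(v_base)) * math.sqrt(sum(v_target))
    # Assumption: an empty feature yields similarity 0 instead of a division by zero.
    return dot / norm if norm else 0.0
```

For the example in the text, the vectors \([1,1,0]\) and \([0,1,1]\) share one component and each has norm \(\sqrt{2}\), so the similarity is \(1/2\) (up to floating-point rounding in the code).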

(2) Select brand feature

Recognizer uses the RFBR strategy for brand feature selection, so we describe RFBR in detail here.

Assume that \(f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} ,f_{{\text{e}}}^{{\left( { * ,3} \right)}} , \ldots ,f_{{\text{e}}}^{{\left( { * ,n} \right)}}\) are all the extracted features, so that the extracted feature vector is \({\mathbf{F}}_{{\text{e}}} = [f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} ,f_{{\text{e}}}^{{\left( { * ,3} \right)}} , \ldots ,f_{{\text{e}}}^{{\left( { * ,n} \right)}} ]\). For each extracted feature \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\), \(1 \le m \le n\), with \({\mathbf{F}}_{{\text{e}}}^{\prime } = {\mathbf{F}}_{{\text{e}}} \backslash f_{{\text{e}}}^{{\left( { * ,m} \right)}}\), we calculate the mean of the intra-brand similarity increments according to (5).

$$\varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) = \frac{1}{M}\sum\limits_{k} {\frac{{\sum\limits_{i} {\sum\limits_{j} {{\mathbf{S}}\left( {{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)}} } \right)\overrightarrow {\left( n \right)}^{{\mathbf{T}}} - {\mathbf{S}}\left( {{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} ,{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)\prime }} } \right)\overrightarrow {{\left( {n - 1} \right)}}^{{\mathbf{T}}} } } }}{{\left| {B_{k} } \right|^{2} }}}$$

(5)

In (5), \(D_{i} ,D_{j} \in B_{k}\), \(B_{k} \in {\varvec{B}}\), \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{i} ,m} \right)}}\), and \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{j} ,m} \right)}}\). Meanwhile, we calculate the mean of inter-brand similarity increments according to (6).

$$\delta \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) = \frac{1}{M}\sum\limits_{k} {\frac{{\sum\limits_{l} {\sum\limits_{i} {{\mathbf{S}}\left( {{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{l} } \right)}} } \right)\overrightarrow {\left( n \right)}^{{\mathbf{T}}} - {\mathbf{S}}\left( {{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} ,{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{l} } \right)\prime }} } \right)\overrightarrow {{\left( {n - 1} \right)}}^{{\mathbf{T}}} } } }}{{\left| {B_{k} } \right|\left| {{\mathbf{B}} - \left\{ {B_{k} } \right\}} \right|}}}$$

(6)

In (6), \(D_{i} \in B_{k}\), \(B_{k} \in {\mathbf{B}}\), \(D_{l} \in {\mathbf{B}} - \left\{ {B_{k} } \right\}\), \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{i} ,m} \right)}}\), and \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{l} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{l} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{l} ,m} \right)}}\). Since \(0 \le S(f^{{\left( {D_{i} ,k} \right)}} ,f^{{\left( {D_{j} ,k} \right)}} ) \le 1\), it follows from (5) and (6) that (7) holds.

$$- 1 \le \varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right),\delta \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) \le 1$$

(7)

According to \(\varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right)\) and \(\delta \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right)\), we design a rule (named the RQB rule, given in (8)) that quantifies the feature differentiation in brand recognition, in order to assess the role of \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) in brand recognition.

$$\chi_{{{\text{rqb}}}} \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) = \left\{ {\begin{array}{*{20}l} {\alpha \varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) - \left( {1 - \alpha } \right)\delta \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right),} \hfill & {\varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) \ge 0} \hfill \\ {0,} \hfill & {\varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) < 0} \hfill \\ \end{array} } \right.$$

(8)

In (8), \(\alpha\) is an adjustable parameter, \(\alpha \in [0,1]\). If \(\chi_{{{\text{rqb}}}} (f_{{\text{e}}}^{{\left( { * ,m} \right)}} ) > 0\), \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) will be selected as the brand feature, and the weight of \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) is \(\chi_{{{\text{rqb}}}} (f_{{\text{e}}}^{{\left( { * ,m} \right)}} )\).
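The RFBR computation in (5), (6) and (8) can be sketched as follows. This is an illustrative reading under stated assumptions, not the authors' code. All names are hypothetical, `sim(a, b)` is assumed to return the similarity function vector of two devices, the denominator of (6) is assumed to count the devices outside brand \(B_{k}\), and the toy devices with an exact-match similarity are made up for the example.

```python
def increment(sim_vec, m):
    """Mean similarity over all n features minus the mean with feature m removed."""
    n = len(sim_vec)
    full = sum(sim_vec) / n
    reduced = (sum(sim_vec) - sim_vec[m]) / (n - 1)
    return full - reduced


def phi(brands, sim, m):
    """Mean intra-brand similarity increment of feature m, following (5)."""
    per_brand = []
    for devices in brands.values():
        total = sum(increment(sim(a, b), m) for a in devices for b in devices)
        per_brand.append(total / len(devices) ** 2)
    return sum(per_brand) / len(brands)


def delta(brands, sim, m):
    """Mean inter-brand similarity increment of feature m, following (6).

    Assumption: the second factor of the denominator is the number of
    devices that do not belong to the current brand.
    """
    per_brand = []
    for name, devices in brands.items():
        others = [d for other, ds in brands.items() if other != name for d in ds]
        total = sum(increment(sim(a, b), m) for a in devices for b in others)
        per_brand.append(total / (len(devices) * len(others)))
    return sum(per_brand) / len(brands)


def chi_rqb(phi_m, delta_m, alpha=0.5):
    """RQB rule (8): importance of a feature for brand recognition."""
    if phi_m < 0:
        return 0.0
    return alpha * phi_m - (1 - alpha) * delta_m


def exact_match(a, b):
    """Toy similarity function vector: 1 where two feature values are equal, else 0."""
    return [1.0 if x == y else 0.0 for x, y in zip(a, b)]


# Made-up knowledge set: feature 0 follows the brand, feature 1 varies inside it.
toy_brands = {"A": [("x", "p"), ("x", "q")], "B": [("y", "p"), ("y", "q")]}
brand_weights = [
    chi_rqb(phi(toy_brands, exact_match, m), delta(toy_brands, exact_match, m))
    for m in range(2)
]
```

In this toy set, only feature 0 receives a positive weight, so it alone would be selected as a brand feature.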

(3) Select model feature

Recognizer uses the RFMR strategy for model feature selection, so we describe the process of RFMR in detail here.

Assume that \(f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} ,f_{{\text{e}}}^{{\left( { * ,3} \right)}} , \ldots ,f_{{\text{e}}}^{{\left( { * ,n} \right)}}\) are all the extracted features, so that the extracted feature vector is \({\mathbf{F}}_{{\text{e}}} = [f_{{\text{e}}}^{{\left( { * ,1} \right)}} ,f_{{\text{e}}}^{{\left( { * ,2} \right)}} ,f_{{\text{e}}}^{{\left( { * ,3} \right)}} , \ldots ,f_{{\text{e}}}^{{\left( { * ,n} \right)}} ]\). For each extracted feature \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\), \(1 \le m \le n\), with \({\mathbf{F}}_{{\text{e}}}^{\prime } = {\mathbf{F}}_{{\text{e}}} \backslash f_{{\text{e}}}^{{\left( { * ,m} \right)}}\), we calculate \(\varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right)\) according to (5), and calculate the standard deviation of the intra-brand similarity increments according to (9).

$$\left\{ {\begin{array}{*{20}l} {E\left( {B_{k} } \right) = \frac{{\sum\limits_{i} {\sum\limits_{j} {{\mathbf{S}}\left( {{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)}} } \right)\overrightarrow {\left( n \right)}^{\mathbf{T}} - {\mathbf{S}}\left( {{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} ,{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)\prime }} } \right)\overrightarrow {{\left( {n - 1} \right)}}^{\mathbf{T}} } } }}{{\left| {B_{k} } \right|^{2} }}} \hfill \\ {\gamma \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) = \frac{1}{M}\sum\limits_{k} {\left( {\frac{{\sum\limits_{i} {\sum\limits_{j} {\left( {{\mathbf{S}}\left( {{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} ,{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)}} } \right)\overrightarrow {\left( n \right)}^{\mathbf{T}} - {\mathbf{S}}\left( {{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} ,{\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)\prime }} } \right)\overrightarrow {{\left( {n - 1} \right)}}^{\mathbf{T}} - E\left( {B_{k} } \right)} \right)^{2} } } }}{{\left| {B_{k} } \right|^{2} }}} \right)^{\frac{1}{2}} } } \hfill \\ \end{array} } \right.$$

(9)

In (9), \(D_{i} ,D_{j} \in B_{k}\), \(B_{k} \in {\mathbf{B}}\), \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{i} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{i} ,m} \right)}}\), \({\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)\prime }} = {\mathbf{F}}_{{\text{e}}}^{{\left( {D_{j} } \right)}} \backslash f_{{\text{e}}}^{{\left( {D_{j} ,m} \right)}}\).

According to \(\varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right)\) and \(\gamma \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right)\), we design a rule (named the RQM rule, given in (10)) that quantifies the feature differentiation in model recognition, in order to assess the role of \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) in model recognition.

$$\chi_{{{\text{rqm}}}} \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) = \left\{ {\begin{array}{*{20}l} {0,} \hfill & {\varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) \ge 0} \hfill \\ { - \beta \varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) - \left( {1 - \beta } \right)\gamma \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right),} \hfill & {\varphi \left( {f_{{\text{e}}}^{{\left( { * ,m} \right)}} } \right) < 0} \hfill \\ \end{array} } \right.$$

(10)

In (10), \(\beta\) is an adjustable parameter, \(\beta \in [0.5,1]\). If \(\chi_{{{\text{rqm}}}} (f_{{\text{e}}}^{{\left( { * ,m} \right)}} ) > 0\), \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) will be selected as the model feature, and the weight of \(f_{{\text{e}}}^{{\left( { * ,m} \right)}}\) is \(\chi_{{{\text{rqm}}}} (f_{{\text{e}}}^{{\left( { * ,m} \right)}} )\).
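The RFMR quantities in (9) and (10) can be sketched in the same style. Again, this is an illustration under stated assumptions: all names are hypothetical, `sim(a, b)` is assumed to return the similarity function vector of two devices, and the toy devices with an exact-match similarity are made up for the example.

```python
import math


def increment(sim_vec, m):
    """Mean similarity over all n features minus the mean with feature m removed."""
    n = len(sim_vec)
    full = sum(sim_vec) / n
    reduced = (sum(sim_vec) - sim_vec[m]) / (n - 1)
    return full - reduced


def phi_and_gamma(brands, sim, m):
    """Mean (5) and standard deviation (9) of the intra-brand increments of feature m."""
    means, stds = [], []
    for devices in brands.values():
        incs = [increment(sim(a, b), m) for a in devices for b in devices]
        e_bk = sum(incs) / len(incs)  # E(B_k); len(incs) equals |B_k| squared
        variance = sum((x - e_bk) ** 2 for x in incs) / len(incs)
        means.append(e_bk)
        stds.append(math.sqrt(variance))
    return sum(means) / len(brands), sum(stds) / len(brands)


def chi_rqm(phi_m, gamma_m, beta=0.75):
    """RQM rule (10): importance of a feature for model recognition."""
    if phi_m >= 0:
        return 0.0
    return -beta * phi_m - (1 - beta) * gamma_m


def exact_match(a, b):
    """Toy similarity function vector: 1 where two feature values are equal, else 0."""
    return [1.0 if x == y else 0.0 for x, y in zip(a, b)]


# Made-up knowledge set: feature 0 follows the brand, feature 1 varies inside it.
toy_brands = {"A": [("x", "p"), ("x", "q")], "B": [("y", "p"), ("y", "q")]}
model_weights = [chi_rqm(*phi_and_gamma(toy_brands, exact_match, m)) for m in range(2)]
```

Here feature 1, which lowers the similarity between same-brand devices, is the one that receives a positive model weight, while feature 0 is rejected because its mean increment is non-negative.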

(4) Recognize device type

In device type recognition, there are two parts: brand recognition and model recognition. We first perform brand recognition on the target device, and then perform model recognition.

In brand recognition, brand features and normalized weights are used in (11) to calculate the similarity between target device and known devices.

$$\Phi \left( {K_{i} ,T} \right) = {\mathbf{S}}\left( {{\mathbf{F}}_{{\text{b}}}^{{\left( {K_{i} } \right)}} ,{\mathbf{F}}_{{\text{b}}}^{\left( T \right)} } \right){\mathbf{W}}\left( {{\mathbf{F}}_{{\text{b}}} } \right)$$

(11)

In (11), \(T\) is the target device, \(K_{i}\) is a known device in the knowledge set, and \({\mathbf{W}}\left( {{\mathbf{F}}_{{\text{b}}} } \right)\) is the normalized weight vector of the brand features. The brand of the known device in the knowledge set that has the greatest similarity with the target device is taken as the brand of the target device, which completes brand recognition.

In model recognition, model features and normalized weights are used in (12) to calculate the similarity between the target device and known devices. Here, only known devices of the same brand as the target device are considered.

$$\Psi \left( {K_{i} ,T} \right) = {\mathbf{S}}\left( {{\mathbf{F}}_{{\text{m}}}^{{\left( {K_{i} } \right)}} ,{\mathbf{F}}_{{\text{m}}}^{\left( T \right)} } \right){\mathbf{W}}\left( {{\mathbf{F}}_{{\text{m}}} } \right)$$

(12)

In (12), \(T\) is the target device, \(K_{i}\) is a known device in the knowledge set (of the same brand as the target device), and \({\mathbf{W}}\left( {{\mathbf{F}}_{{\text{m}}} } \right)\) is the normalized weight vector of the model features. The model of the known device that has the greatest similarity with the target device is taken as the model of the target device, which completes model recognition.
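The two-stage recognition in (11) and (12), together with the weight normalization of Step 6, can be sketched as follows. This is an illustration under stated assumptions: the function names, the dictionary layout of the knowledge set and the toy devices are hypothetical, normalization is assumed to divide each weight by the sum of the weights, and a simple exact-match comparison stands in for the feature similarity rules.

```python
def normalize(weights):
    """Scale the weights so that they sum to 1 (assumed form of Step 6)."""
    total = sum(weights)
    return [w / total for w in weights]


def weighted_similarity(known, target, indices, weights, feature_sim):
    """Weighted sum of per-feature similarities over the selected features."""
    return sum(w * feature_sim(known[i], target[i]) for i, w in zip(indices, weights))


def recognize(target, knowledge, brand_sel, model_sel, feature_sim):
    """Return (brand, model) of the most similar known device, brand first."""
    b_idx, b_w = brand_sel[0], normalize(brand_sel[1])
    m_idx, m_w = model_sel[0], normalize(model_sel[1])
    # Brand recognition (11): compare against every known device.
    best_brand = max(
        knowledge,
        key=lambda k: weighted_similarity(k["features"], target, b_idx, b_w, feature_sim),
    )["brand"]
    # Model recognition (12): compare only against devices of that brand.
    same_brand = [k for k in knowledge if k["brand"] == best_brand]
    best_model = max(
        same_brand,
        key=lambda k: weighted_similarity(k["features"], target, m_idx, m_w, feature_sim),
    )["model"]
    return best_brand, best_model


def exact(a, b):
    """Toy per-feature similarity: 1 for equal values, else 0."""
    return 1.0 if a == b else 0.0


# Made-up knowledge set with two features per device.
toy_knowledge = [
    {"brand": "A", "model": "A1", "features": ("x", "p")},
    {"brand": "A", "model": "A2", "features": ("x", "q")},
    {"brand": "B", "model": "B1", "features": ("y", "p")},
    {"brand": "B", "model": "B2", "features": ("y", "q")},
]
# Feature 0 is used as the only brand feature, feature 1 as the only model feature.
result = recognize(("x", "q"), toy_knowledge, ([0], [0.25]), ([1], [0.125]), exact)
```

Restricting the second stage to devices of the recognized brand mirrors the requirement that the known devices in (12) share the target's brand.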